Whether you’re new to UX or a seasoned UX professional, usability testing is a critical component of your work. But what is usability testing? How is it different from user testing? And how do you conduct usability testing? This guide answers these questions and more. Here’s what I’ll cover:

- What is usability testing and why does it matter?

- Usability testing vs. user testing

- How to conduct usability testing

- Final thoughts

1. What is usability testing and why does it matter?

With the widespread adoption of user-centric design, evaluating designs with users has become increasingly critical to the success of a product experience. Usability testing is a tried-and-true method for evaluating designs that involves users directly. It goes beyond asking people whether they understand the system and probes for potential issues with how easy the system is to use. Put simply, usability testing helps you find the improvements that keep the usability of your products top notch.

Common sense tells us that people say one thing and do another. This is especially true in primary research such as user surveys and interviews, where self-reported claims are often skewed or biased. Usability tests instead investigate user behavior without intervention: subtle cues such as facial expressions, body language, and implicit emotional responses are noted down. It is the kind of observational approach traditionally used in science labs.

2. Usability testing vs. user testing

In my experience as a product designer, one of the most common sources of confusion is the difference between user testing and usability testing. For product designers, these two are like apples and oranges.

User tests validate a solution by asking people, “Does this solution address your pain points?” Usability tests, on the other hand, evaluate how useful and usable a product is by observing how users interact with it.

3. How to conduct usability testing

Now that we’ve established the need for usability tests, let’s go deeper into how to conduct effective tests. Much like a scientific lab test, a typical usability test follows a rigorous process of planning, recruiting participants, running the tests, analyzing data, and reporting your findings.

Step 1: Plan your test

Having a solid plan is a must for effective usability testing. When planning, work with stakeholders (client, product manager, engineering team, marketing, etc.) to determine your test objectives. The more targeted your objective, the better the outcome. For example, if you are redesigning an e-commerce website, you can test the ease of use of, and user satisfaction with, the redesigned purchase flow.

While the overall test objective can be abstract, designing a series of 5–10 user tasks lets you evaluate interactions with the interface through concrete actions. In the e-commerce redesign example, you can assign tasks that let you observe how users navigate the site, find specific items, add items to the cart, enter credit card details, enter address information, and so on.

Once you have the list of tasks to be tested, it’s time to define metrics for tracking how successfully users perform them. First, decide what counts as a critical error and a non-critical error, and what successful completion looks like for each task. In the test plan, reserve space for recording each participant’s errors and completion status, so that you can analyze completion rates and error frequency at the end of testing.

A sample one page test plan. Source: userfocus.co.uk
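Once results are logged, completion rate and error frequency are simple per-task ratios. The Python sketch below assumes a hypothetical tracking sheet with one row per participant per task; the `Observation` fields and the `summarize` helper are illustrative names, not a standard format. For each task it computes the share of attempts that were completed and the average number of critical and non-critical errors per attempt.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Observation:
    """One participant's attempt at one task (hypothetical tracking-sheet row)."""
    participant: str
    task: str
    completed: bool
    critical_errors: int = 0
    non_critical_errors: int = 0


def summarize(observations):
    """Group rows by task and compute completion rate and errors per attempt."""
    grouped = defaultdict(list)
    for obs in observations:
        grouped[obs.task].append(obs)

    summary = {}
    for task, rows in grouped.items():
        attempts = len(rows)
        summary[task] = {
            "attempts": attempts,
            # Share of participants who completed the task successfully.
            "completion_rate": sum(r.completed for r in rows) / attempts,
            # Average number of errors of each type per attempt.
            "critical_errors_per_attempt": sum(r.critical_errors for r in rows) / attempts,
            "non_critical_errors_per_attempt": sum(r.non_critical_errors for r in rows) / attempts,
        }
    return summary


if __name__ == "__main__":
    # Made-up rows for illustration only, not real test data.
    sheet = [
        Observation("P1", "Add item to cart", True),
        Observation("P2", "Add item to cart", True, non_critical_errors=1),
        Observation("P3", "Add item to cart", False, critical_errors=1),
        Observation("P1", "Enter address", True),
        Observation("P2", "Enter address", True),
    ]
    for task, stats in summarize(sheet).items():
        print(task, stats)
```

Keeping critical and non-critical errors in separate columns mirrors the distinction the test plan asks you to define up front.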

Finally, complete your plan with how and where the tests will be conducted and the expected completion date. For one of my usability studies, I ran a few in-person tests and a few remote moderated tests. Having a plan with designated time slots helped a lot in organizing both the remote and in-person sessions.

Step 2: Recruit test participants

In addition to goal setting, your test plan needs to outline how and when you will recruit test participants. Budget your usability tests accordingly, because in most cases you will need to compensate participants in some way. You might also need to budget for renting test venues.

It’s most effective to recruit participants whose attributes match your target users. I always note down participants’ demographic information so that I can relate the findings back to the broader business case.

When running moderated tests, it’s also helpful to draft a script for the moderator (especially when you are not the moderator). The script outlines how to introduce the prototype to participants, the tasks to be completed, and the follow-up questions to ask at the end of the test.

If you are wondering what the optimal number of participants is, the Nielsen Norman Group has a comprehensive study on the subject. In short, a minimum of 5 participants is recommended for qualitative usability tests. More participants can add value, but once the number passes a certain threshold, each additional participant yields diminishing returns.
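The diminishing returns are commonly explained with the problem-discovery model popularized by Jakob Nielsen and Tom Landauer: if each participant uncovers a given problem with probability L, then n participants are expected to find a share `1 - (1 - L)^n` of the problems. The sketch below assumes L = 0.31, the average Nielsen reported across the projects he studied; your own discovery rate may differ, and the model is a simplification that treats every problem as equally easy to find. The function name `share_of_problems_found` is illustrative.

```python
def share_of_problems_found(n_users: int, discovery_rate: float = 0.31) -> float:
    """Expected share of usability problems found by n users: 1 - (1 - L)^n.

    discovery_rate (L) is the probability that one user uncovers a given
    problem. 0.31 is an assumed average, not a universal constant.
    """
    if n_users < 0:
        raise ValueError("n_users must be non-negative")
    if not 0.0 <= discovery_rate <= 1.0:
        raise ValueError("discovery_rate must be between 0 and 1")
    return 1.0 - (1.0 - discovery_rate) ** n_users


if __name__ == "__main__":
    previous = 0.0
    for n in range(1, 11):
        found = share_of_problems_found(n)
        # The marginal gain shrinks with every extra participant.
        print(f"{n:2d} users: {found:6.1%} found (+{found - previous:5.1%})")
        previous = found
```

Under these assumptions, five participants find about 84% of the problems, and each additional participant adds less than the one before.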

Step 3: Run tests

Although usability testing is largely observational, skillful moderation still plays a huge role in gaining high-quality insights. Several techniques are used in moderated tests, and each comes with its own pros and cons.

Here are some of these techniques at a glance, with their pros and cons as summarized on usability.gov:

Concurrent Think Aloud (CTA)

Pros:

- Understand participants’ thoughts as they occur and as they attempt to work through issues they encounter.

- Elicit real-time feedback and emotional responses.

Cons:

- Can interfere with usability metrics, such as accuracy and time on task.

Retrospective Think Aloud (RTA)

Pros:

- Does not interfere with usability metrics

Cons:

- Overall session length increases

- Participants may struggle to remember their thoughts from up to an hour earlier, which leads to poor data

Concurrent Probing (CP)

Pros:

- Understand participants’ thoughts as they attempt to work through a task.

Cons:

- Interferes with natural thought process and progression that participants would make on their own if uninterrupted

Retrospective Probing (RP)

Pros:

- Does not interfere with usability metrics.

Cons:

- Participants may struggle to remember their thoughts, which leads to poor data

I personally tend to combine concurrent think aloud with retrospective probing. To do that, I first introduce participants to the context of the product and the list of tasks I’d like them to perform. Participants are asked to think out loud as they work through the tasks. As the moderator, my role is to refrain from interrupting them or offering clues, even when they struggle, and to note down any real-time feedback and emotional responses.

After the test is finished, I go back to my notes and ask participants for feedback on what I observed. This is where the usability expert can uncover design opportunities.

For example, in my UX project for a grocery shopping site, I observed one participant who, given a choice between signing in with Facebook and signing in as a guest, opted for Facebook. I asked her why she chose the Facebook option over the other. Thinking back on her choice, she answered, “I’m lazy. I just wanted to do whatever is easiest.” That is a valuable insight for design.

Also make sure to write down any likes, dislikes, and recommendations voiced during retrospective probing. A simple question such as “Do you have any feedback for us?” will do the trick.

It is important to record both the audio and the screen of each test, so the moderator can go back to the recordings afterward and catch any missed insights.

The same principles and techniques apply to remote moderated usability tests, and technology has made recording remote desktop sessions very easy.

Remote usability testing on mobile is more challenging because of the lack of suitable recording tools, but you can always find creative workarounds. The Mailchimp team, for example, shared how they overcame the hurdle of real-time mobile screen sharing with a creative use of a webcam.

Step 4: Analyze and present test data

Once you have completed all planned tests, it’s time to go back to your notes and research data to dig for insights. The power of an insight lies in its ability to drive action. Presenting your research findings to your team helps bridge the gap between knowledge and solution, and helps the team reach consensus when multiple design solutions seem to carry equal weight.

The answer usually comes directly from the user.

There are two types of data you can present from your usability tests: quantitative data from task completion, and qualitative data synthesized from observational notes and direct user feedback.

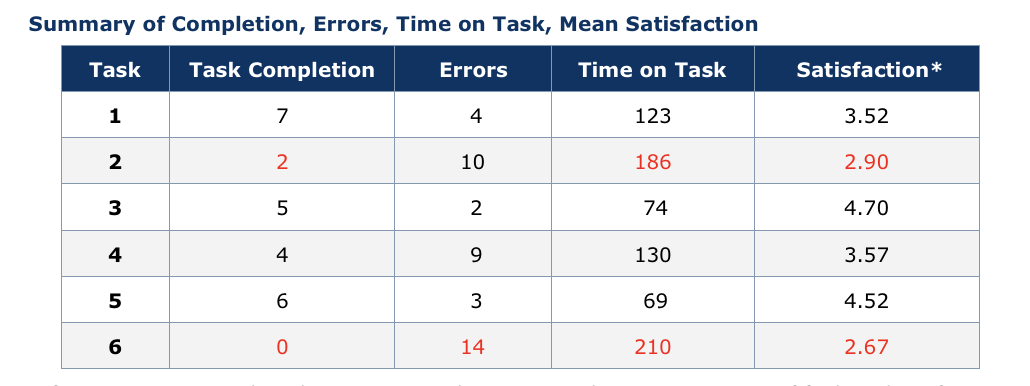

For quantitative findings, a table that lists each task with its level of criticality, successful completion rate, error rate, and number of occurrences is enough to make your case. At a glance, it clearly communicates which usability issues are critical and need urgent attention, and which are less pressing.

You can also include task times and satisfaction ratings to support your findings.

Example of a usability test report. Source: usability.gov
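Ordering the rows so the most serious problems appear first makes such a table easier to scan. The Python sketch below is one hypothetical way to do it; `TaskResult`, `report_rows`, and `render` are illustrative names. It assumes error rate means the share of participants who made at least one error and occurrences means the number of participants who hit the issue, since teams define these differently. Critical tasks come first, then tasks with the lowest completion rate, and mean task time is included as supporting data.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class TaskResult:
    task: str
    critical: bool            # assumption: failure blocks the user's goal
    completion_rate: float    # 0.0 to 1.0
    error_rate: float         # assumption: share of participants with >= 1 error
    occurrences: int          # assumption: participants who hit the issue
    task_times_s: list[float] # seconds, one entry per participant


def report_rows(results):
    """Sort critical tasks first, then by ascending completion rate."""
    ordered = sorted(results, key=lambda r: (not r.critical, r.completion_rate))
    return [
        (
            r.task,
            "critical" if r.critical else "non-critical",
            f"{r.completion_rate:.0%}",
            f"{r.error_rate:.0%}",
            str(r.occurrences),
            f"{mean(r.task_times_s):.0f}s",
        )
        for r in ordered
    ]


def render(rows):
    """Render rows as a plain-text table with left-aligned columns."""
    header = ("Task", "Criticality", "Completion", "Error rate", "Occurrences", "Mean time")
    # Width of each column is the longest cell in it, header included.
    widths = [max(len(cell) for cell in col) for col in zip(header, *rows)]
    lines = [
        "  ".join(cell.ljust(w) for cell, w in zip(row, widths)).rstrip()
        for row in (header, *rows)
    ]
    return "\n".join(lines)


if __name__ == "__main__":
    # Made-up results for illustration only.
    results = [
        TaskResult("Browse catalog", False, 1.0, 0.2, 1, [20, 40]),
        TaskResult("Checkout", True, 0.6, 0.4, 2, [90, 110]),
        TaskResult("Search item", True, 0.8, 0.2, 1, [30, 50]),
    ]
    print(render(report_rows(results)))
```

Sorting on criticality before completion rate keeps a mostly successful but critical task above a non-critical one that few people finished; swap the key if your team prioritizes differently.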

For qualitative findings, present your data in a way that leads to design recommendations. Use direct quotes and observational notes to support your recommended solution. At the end of the day, the goal of your usability test is to identify issues and improve upon them with better designs.

4. Final thoughts

There you have it! Usability testing is one of my favourite UX methods. Sometimes, it is what makes a good design great. I hope this guide will help you in your next usability test!

If you’d like to learn more about usability testing, check out these other articles: