Data scientists use a wide variety of machine learning algorithms to find patterns in big data. They translate that data into practical insights for the organizations they work for. The key for data professionals is to learn how to pick the right algorithm for each problem.

One extremely useful algorithm is Random Forest—an algorithm used for both classification and regression tasks. Confused? Don’t worry, all will become clear!

In this guide, you’ll learn exactly what Random Forest is, how it’s used, and what its advantages are.


In this article we’ll cover:

- What is Random Forest?

- How does the Random Forest algorithm work?

- What is Random Forest used for?

- What are the advantages of Random Forest?

- What are the disadvantages of Random Forest?

- Key takeaways

So: What on earth is Random Forest? Let’s find out.

1. What is Random Forest?

Random Forest is a powerful and versatile supervised machine learning algorithm that grows and combines multiple decision trees to create a “forest.” It can be used for both classification and regression problems in R and Python.

There we have a working definition of Random Forest, but what does it all mean? Before we explore Random Forest in more detail, let’s break it down:

- What do we mean by supervised machine learning?

- What are classification and regression?

- What are decision trees?

Understanding each of these concepts will help you to understand Random Forest and how it works. So let’s explain.

What is supervised machine learning?

Supervised machine learning is when the algorithm (or model) is trained on a labeled training dataset, that is, a set of examples for which the correct output is already known.

The model is trained using many different examples of inputs paired with their outputs, and thus learns how to predict the output for any new input data it receives in the future. This is how algorithms are used to predict future outcomes.

What are regression and classification in machine learning?

In machine learning, algorithms are used to classify certain observations, events, or inputs into groups. For example, an email spam filter will classify each email as either “spam” or “not spam”.

However, the email example is just a simple one; within a business context, the predictive powers of such models can have a major impact on how decisions are made and how strategies are formed—but more on that later.

So: Regression and classification are both supervised machine learning problems used to predict the value or category of an outcome or result.

In classification analysis, the dependent attribute is categorical. Classification tasks learn how to assign a class label to examples from the problem domain. As mentioned previously, a common example of classification is your email’s spam filter.

In regression analysis, the dependent attribute is numerical instead. Regression is used when the output variable is a real or continuous value such as salary, age, or weight.

For a simple way to distinguish between the two, remember that classification is about predicting a label (e.g. “spam” or “not spam”) while regression is about predicting a quantity.

What are decision trees?

As we know, the Random Forest model grows and combines multiple decision trees to create a “forest.”

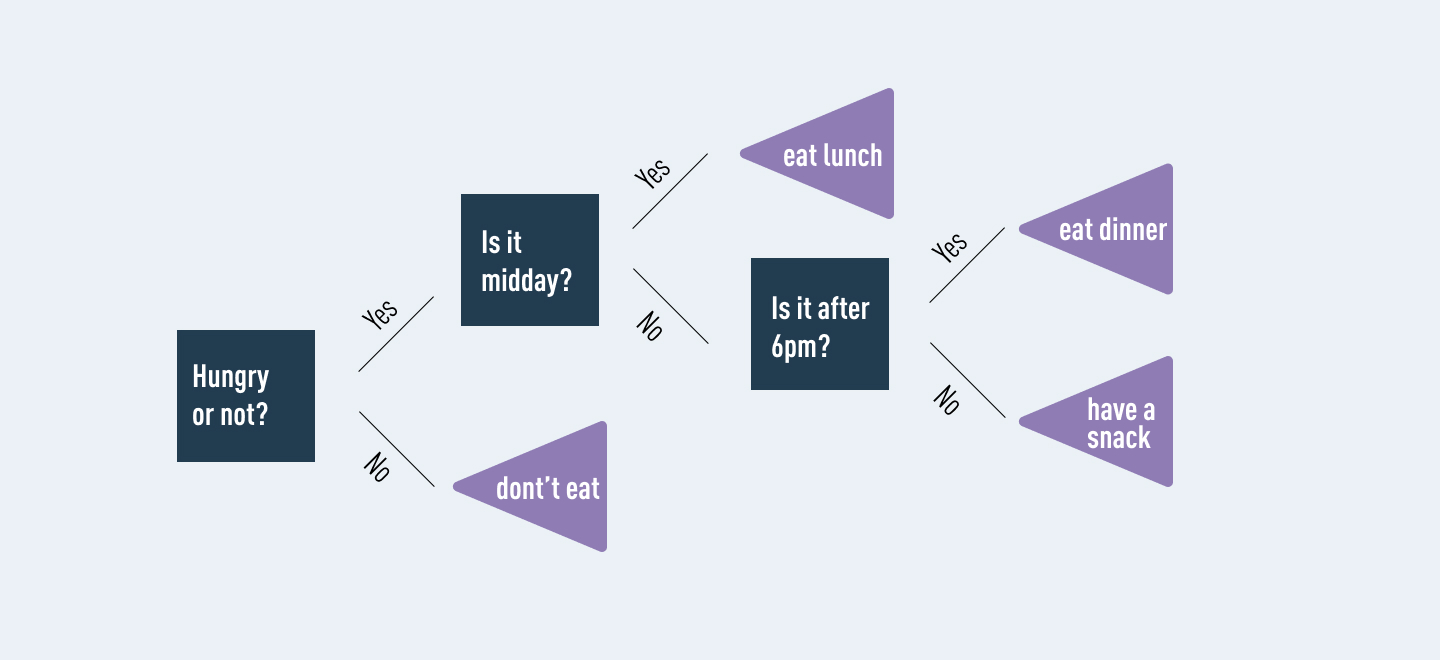

A decision tree is another type of algorithm used to classify data. In very simple terms, you can think of it like a flowchart that draws a clear pathway to a decision or outcome; it starts at a single point and then branches off into two or more directions, with each branch of the decision tree offering different possible outcomes.

You can learn more about decision trees and how they’re used in this guide.

An example of a simple decision tree
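To make the flowchart idea concrete, here is a minimal sketch of a hand-written decision tree for the spam example. The feature names (`sender_known`, `contains_link`, `exclamation_marks`) and the threshold are invented purely for illustration; a real decision tree learns its rules from training data rather than having them typed in.

```python
def classify_email(contains_link: bool, sender_known: bool, exclamation_marks: int) -> str:
    """Hand-written decision tree; each `if` is one branch point in the flowchart."""
    if sender_known:
        # Known senders are trusted unless the message looks very "shouty".
        if exclamation_marks > 5:
            return "spam"
        return "not spam"
    # Unknown sender: a link is treated as a strong spam signal.
    if contains_link:
        return "spam"
    return "not spam"


print(classify_email(contains_link=True, sender_known=False, exclamation_marks=0))  # spam
print(classify_email(contains_link=False, sender_known=True, exclamation_marks=1))  # not spam
```

Each path from the first question to a returned label corresponds to one route through the flowchart.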

Classification is an important and highly valuable branch of data science, and Random Forest is an algorithm that can be used for such classification tasks.

Random Forest’s ensemble of trees outputs either the mode (for classification) or the mean (for regression) of the individual trees’ predictions. This method allows for more accurate and stable results by relying on a multitude of trees rather than a single decision tree.

It’s kind of like the difference between a unicycle and a four-wheeler!

2. How does the Random Forest algorithm work?

Random Forest grows multiple decision trees which are merged together for a more accurate prediction.

The logic behind the Random Forest model is that multiple uncorrelated models (the individual decision trees) perform much better as a group than they do alone. When using Random Forest for classification, each tree gives a classification or a “vote.” The forest chooses the classification with the majority of the “votes.” When using Random Forest for regression, the forest picks the average of the outputs of all trees.
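The voting and averaging steps can be sketched in a few lines of Python. The tree outputs below are made-up values standing in for the predictions of already-trained trees, and the function names are hypothetical.

```python
from collections import Counter
from statistics import mean


def forest_classify(tree_votes: list[str]) -> str:
    """Return the class predicted by the most trees (the mode of the votes)."""
    return Counter(tree_votes).most_common(1)[0][0]


def forest_regress(tree_outputs: list[float]) -> float:
    """Return the average of the individual trees' numeric predictions."""
    return mean(tree_outputs)


votes = ["spam", "not spam", "spam", "spam", "not spam"]
print(forest_classify(votes))      # spam (3 of 5 votes)

salaries = [52_000.0, 48_000.0, 50_000.0, 54_000.0]
print(forest_regress(salaries))    # 51000.0
```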

The key here lies in the fact that there is low (or no) correlation between the individual models, that is, between the decision trees that make up the larger Random Forest model. While individual decision trees may produce errors, those errors tend not to coincide, so the majority of the group will usually be correct, thus moving the overall outcome in the right direction.
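A quick calculation shows why a group of mediocre but uncorrelated trees can beat any one of them. The sketch below assumes an idealized case: every tree is correct with the same probability and the trees are fully independent. Real trees in a forest are only partly uncorrelated, so the actual gain is smaller.

```python
from math import comb


def majority_correct_probability(n_trees: int, p_correct: float) -> float:
    """Probability that more than half of n independent trees are correct."""
    needed = n_trees // 2 + 1  # smallest number of votes that is a strict majority
    return sum(
        comb(n_trees, k) * p_correct**k * (1 - p_correct) ** (n_trees - k)
        for k in range(needed, n_trees + 1)
    )


# Each tree is right only 60% of the time, yet the majority vote improves with size.
for n in (1, 11, 101):
    print(n, round(majority_correct_probability(n, 0.6), 3))
```

Under these assumptions, the probability that the majority vote is right climbs steadily as trees are added, even though no single tree gets any better.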

Are decision trees in Random Forest different from regular decision trees?

It’s easy to confuse a single decision tree with a decision forest.

It seems like a decision forest would be a bunch of single decision trees, and it is… kind of. It is a collection of decision trees, but randomness is mixed into how each one is grown: every tree is built from a different random sample of the data and considers random subsets of the features, instead of every tree learning from the full dataset.

When using a regular decision tree, you input a training dataset with features and labels, and the tree formulates a set of rules that it uses to make predictions. If you enter that same information into a Random Forest algorithm, it randomly selects observations and features to build several decision trees and then combines their results by averaging or voting.

For example, suppose you wanted to use a single decision tree to predict how much a bank’s customer will use a specific service the bank provides. You would gather data on how often they’ve used the bank in the past and which services they used during their visits, and add some features that describe that customer’s decisions. The decision tree would generate rules to help predict the customer’s use of the service.

If you inputted that same dataset into a Random Forest, the algorithm would build multiple trees out of randomly selected customer visits and service usage. Then it would output the average of those trees’ results.

How are the trees in a Random Forest trained?

Decision trees in an ensemble, like the trees within a Random Forest, are usually trained using the “bagging” method.

“Bagging” is short for Bootstrap Aggregation, a type of ensemble method. An ensemble method combines predictions from multiple machine learning models to make more accurate predictions than an individual model. Random Forest is also an ensemble method.

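The bootstrap step randomly samples rows from the dataset, with replacement, to form a separate sample dataset for every model. Random Forest adds a second source of randomness by also sampling the features that each tree may use.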
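Here is a minimal sketch of that sampling step using only Python's standard library. The customer table and the helper name `bootstrap_sample` are hypothetical. As a simplification, this sketch picks one feature subset per tree; common Random Forest implementations instead re-draw the candidate features at every split inside a tree.

```python
import random


def bootstrap_sample(rows, n_features, rng):
    """Return one tree's training sample and the feature columns it may use."""
    # Rows: drawn WITH replacement, so some rows repeat and others are left out.
    sampled_rows = [rng.choice(rows) for _ in rows]
    # Features: a random subset of column indices, drawn without replacement.
    feature_idx = sorted(rng.sample(range(len(rows[0])), n_features))
    return [[row[i] for i in feature_idx] for row in sampled_rows], feature_idx


# Hypothetical customer data: [visits_per_month, balance, age, services_used]
customers = [
    [4, 1200, 34, 2],
    [1, 300, 51, 1],
    [9, 8000, 28, 4],
    [2, 950, 45, 1],
    [6, 4300, 39, 3],
]

rng = random.Random(0)  # fixed seed so the sketch is repeatable
for tree_number in range(3):
    sample, features = bootstrap_sample(customers, n_features=2, rng=rng)
    print(f"tree {tree_number}: features {features}, rows {sample}")
```

Each tree ends up with its own slightly different view of the data, which is what keeps the trees from all making the same mistakes.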

The aggregation step combines the predictions of the models trained on these sample datasets, by voting for classification or by averaging for regression. Bootstrap Aggregation can be used to reduce the variance of high variance algorithms such as decision trees.
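The variance reduction can be seen in a small simulation. This is a sketch under a strong assumption: each "model" is just the true value plus independent random noise. Trees trained on bootstrap samples of the same data are partly correlated, so in practice the reduction is less dramatic than what this toy setup shows.

```python
import random
from statistics import mean, pvariance

rng = random.Random(42)  # fixed seed so the sketch is repeatable
TRUE_VALUE = 10.0


def noisy_estimate() -> float:
    """Stand-in for one high-variance model: the truth plus random noise."""
    return TRUE_VALUE + rng.gauss(0, 2.0)


def bagged_estimate(n_models: int) -> float:
    """Average the outputs of several noisy models, as aggregation does."""
    return mean(noisy_estimate() for _ in range(n_models))


single = [noisy_estimate() for _ in range(2000)]
bagged = [bagged_estimate(25) for _ in range(2000)]

print("variance of a single model:  ", round(pvariance(single), 3))
print("variance of 25 models averaged:", round(pvariance(bagged), 3))
```

The averaged predictions cluster much more tightly around the true value than the individual ones do.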

Variance is an error resulting from sensitivity to small fluctuations in the dataset used for training. High variance will cause an algorithm to model irrelevant data, or noise, in the dataset instead of the intended outputs, called signal. This problem is called overfitting. An overfitted model will perform well in training, but won’t be able to distinguish the noise from the signal in an actual test.
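Overfitting is easy to reproduce with a toy example. The sketch below uses invented data in which the true rule is "x is greater than 0.5", but 20% of the labels are flipped to act as noise. The `memorizer` is a deliberately high-variance stand-in model (not a decision tree) that copies the label of the nearest training point, noise included; `simple_rule` is a hypothetical well-chosen threshold for comparison.

```python
import random

rng = random.Random(0)  # fixed seed so the sketch is repeatable


def make_data(n):
    """x in [0, 1); the true label is x > 0.5, but 20% of labels are flipped (noise)."""
    data = []
    for _ in range(n):
        x = rng.random()
        label = x > 0.5
        if rng.random() < 0.2:
            label = not label
        data.append((x, label))
    return data


train, test = make_data(200), make_data(1000)


def memorizer(x):
    """High-variance model: copy the label of the closest training point."""
    return min(train, key=lambda point: abs(point[0] - x))[1]


def simple_rule(x):
    """Low-variance model: a single threshold that ignores the noise."""
    return x > 0.5


def accuracy(model, data):
    return sum(model(x) == label for x, label in data) / len(data)


for name, model in [("memorizer", memorizer), ("simple rule", simple_rule)]:
    print(name, "train:", accuracy(model, train), "test:", accuracy(model, test))
```

The memorizer scores perfectly on the data it was trained on, because it has learned the noise as well as the signal, and then loses accuracy on data it has not seen.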

Bagging is the application of the bootstrap method to a high variance machine learning algorithm.

3. What is Random Forest used for?

Random Forest is used on the job by data scientists in many industries including banking, stock trading, medicine, and e-commerce.

It’s used to predict the things which help these industries run efficiently, such as customer activity, patient history, and safety.

Random Forest is used in banking to identify customers who are more likely to repay their debt on time. It’s also used to predict who will use a bank’s services more frequently. Banks even use it to detect fraud. Talk about the Robin Hood of algorithms!

Stock traders use Random Forest to predict a stock’s future behavior. It’s used by retail companies to recommend products and predict customer satisfaction as well.

Scientists in China used Random Forest to study the spontaneous combustion patterns of coal to reduce safety risks in coal mines!

In healthcare, Random Forest can be used to analyze a patient’s medical history to identify diseases. Pharmaceutical scientists use Random Forest to identify the correct combination of components in a medication or predict drug sensitivity. Sometimes Random Forest is even used for computational biology and the study of genetics.

If you’d like to learn more about how Random Forest is used in the real world, check out the following case studies:

- Using Random Forests on Real-World City Data for Urban Planning in a Visual Semantic Decision Support System

- A real-world example of predicting Sales volume with Random Forest Regression on a Jupyter Notebook

4. What are the advantages of Random Forest?

Random Forest is popular, and for good reason! It offers a variety of advantages, from accuracy and efficiency to relative ease of use.

For data scientists wanting to use Random Forests in Python, scikit-learn offers simple and efficient implementations for both classification (RandomForestClassifier) and regression (RandomForestRegressor).
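As a minimal sketch of what that looks like in practice, the example below trains scikit-learn's `RandomForestClassifier` on synthetic data generated by `make_classification`. The dataset and the parameter values are illustrative assumptions, not recommendations, and the example assumes scikit-learn is installed.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split

# Synthetic stand-in data: 1,000 rows, 10 numeric features, 2 classes.
X, y = make_classification(
    n_samples=1000, n_features=10, n_informative=5, random_state=0
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

# n_estimators is the number of trees; max_features limits the features
# considered at each split, which is where the "random" in the name comes from.
model = RandomForestClassifier(n_estimators=100, max_features="sqrt", random_state=0)
model.fit(X_train, y_train)

print("test accuracy:", model.score(X_test, y_test))
print("trees in the forest:", len(model.estimators_))
```

For a numeric target, `RandomForestRegressor` from the same module follows the same fit and predict pattern.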

The most convenient benefit of using Random Forest is its default ability to correct for decision trees’ habit of overfitting to their training set. Using the bagging method and random feature selection greatly reduces the problem of overfitting, which is great because overfitting leads to inaccurate outcomes. Plus, Random Forest is often described as robust to missing data, although how missing values are handled depends on the implementation.

On large datasets, Random Forest generally gives more accurate and stable results than a single decision tree, although training many trees takes more computation. On the other hand, a well-tuned neural network can outperform Random Forest on some problems. A neural network, sometimes just called a neural net, is a series of algorithms that reveal the underlying relationships within a dataset by loosely mimicking the way that a human brain works.

Neural nets are more complicated than Random Forests, but they can produce very strong results by adapting to changing inputs. Unlike neural nets, Random Forest is set up in a way that allows for quick development with only a few hyperparameters (settings chosen before training, such as the number of trees), which makes for less setup time.

Since it takes less time and expertise to develop a Random Forest, this convenience often outweighs the neural network’s potential long-term gains, especially for less experienced data scientists.

So, to summarize, the key benefits of using Random Forest are:

- Ease of use

- Efficiency

- Accuracy

- Versatility – can be used for classification or regression

- More beginner friendly than similarly accurate algorithms like neural nets

5. What are the disadvantages of Random Forest?

There aren’t many downsides to Random Forest, but every tool has its flaws.

Because Random Forest uses many decision trees, it can require a lot of memory on larger projects. Training and querying many trees can also make it slower than some other, more efficient, algorithms.
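A rough back-of-the-envelope calculation shows how quickly the size of a forest adds up. This sketch assumes every tree is a full binary tree of the given depth, which is an upper bound; real trees are usually unbalanced and smaller. The function name is hypothetical.

```python
def max_forest_nodes(n_trees: int, max_depth: int) -> int:
    """Upper bound on stored nodes: each full binary tree has 2^(depth + 1) - 1 nodes."""
    nodes_per_tree = 2 ** (max_depth + 1) - 1
    return n_trees * nodes_per_tree


print(max_forest_nodes(n_trees=1, max_depth=10))    # 2047
print(max_forest_nodes(n_trees=100, max_depth=10))  # 204700
```

Every one of those nodes has to be stored and, at prediction time, one path per tree has to be walked.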

Because Random Forest is built from decision trees, which are prone to overfitting, the forest as a whole can sometimes overfit too.

In practice, Random Forest usually guards against this by default, because each tree is built from a random sample of the data and considers random subsets of the features. Building many trees can slow down processing but tends to increase accuracy.

6. Key takeaways

So there you have it: A complete introduction to Random Forest. To recap:

- Random Forest is a supervised machine learning algorithm made up of decision trees

- Random Forest is used for both classification and regression—for example, classifying whether an email is “spam” or “not spam”

- Random Forest is used across many different industries, including banking, retail, and healthcare, to name just a few!

- Overall, Random Forest is accurate, efficient, and relatively quick to develop, making it an extremely handy tool for data professionals.
