In data analytics, regression and classification are both techniques used to carry out predictive analyses. But how do these models work, and how do they differ? Read on to find out.

For any data analyst, statistical skills are a must-have. One area where these skills come in particularly useful is in the field of predictive analytics. Emerging areas like machine learning and artificial intelligence rely on predictive analytics to identify patterns and predict trends. Two common families of algorithms used to solve these types of problems are regression and classification algorithms, and each is suited to a different type of predictive task. If you’re interested in breaking into machine learning and AI, you must learn to tell classification and regression problems apart.

In this post, we’ll explore regression and classification in more detail, including how they’re used for predictive modeling. We’ll ask:

- What is predictive analytics?

- What is classification?

- What is regression?

- Classification vs. regression: What’s the difference?

- In summary

Before diving deeper, let’s start with some background: predictive analytics.

1. What is predictive analytics?

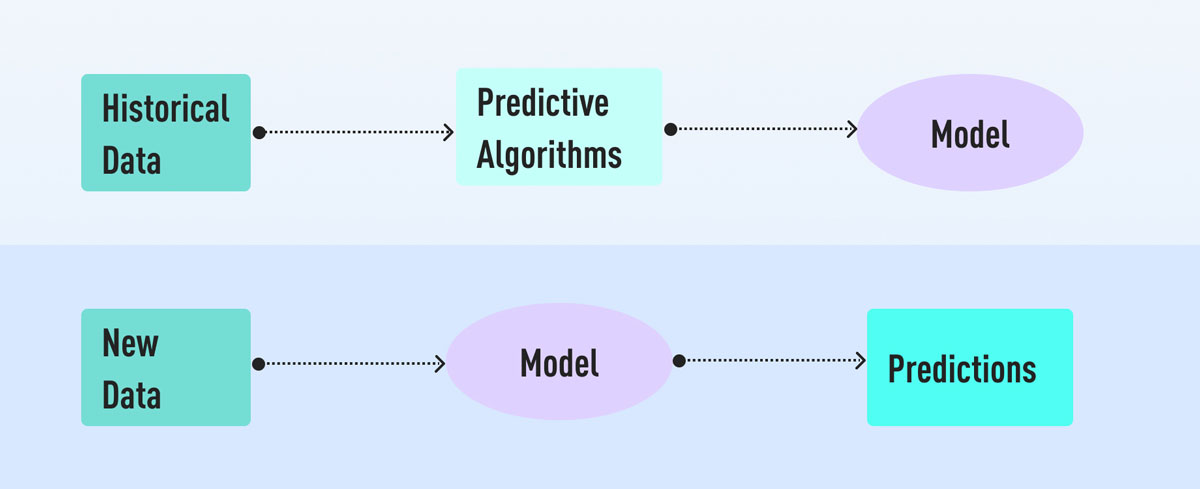

Predictive analytics is an area of data analytics that uses existing information to predict future trends or behaviors. This type of analysis applies to many areas of data analytics, but it is particularly prominent in the emerging fields of artificial intelligence and machine learning.

While there are many different ways of carrying out predictive tasks, all predictive models share certain qualities. To start with, they all rely on independent input (or ‘explanatory’) variables. These input variables are then used to infer, or predict, an unknown outcome (known as a dependent output variable).

Of course, when your job is to make predictions, it’s important to be as accurate as possible. The accuracy of predictive analytics relies on several factors. Firstly, predictive analytics often uses ‘training’ datasets. These datasets contain examples whose outcomes are already known, so they can guide an algorithm toward patterns that are known to be correct. Naturally, the quality of these datasets affects the outcome. Other factors affecting accuracy include the depth of analysis and the assumptions made when programming the algorithm. Not surprisingly, the experience level of the data analyst solving the problem is also a key determining factor.

As you might already suspect, predictive analysis is not always straightforward! However, it has very powerful potential. For instance, in real estate, predictive analysis is used to estimate future house prices. Your email provider uses predictive analytics to determine whether or not incoming emails are spam. Predictive analytics is also commonly used in meteorology to predict the weather, to improve sales strategies in sectors like retail, and even to diagnose cancer. The uses are pretty much endless.

What we’ve described so far is the overall concept of predictive analytics. Beyond this, each type of task requires different tools, or models, to solve it. This is where algorithms come into play. Two types of algorithms commonly used in predictive analytics are classification and regression algorithms. Now that we’ve covered the basics, let’s look at each one in more detail.

2. What is classification?

Classification is the task of predicting or identifying which category (or categories) an observation (or data point) belongs to. In classification problems, output variables (in this case, the predictions) are always discrete values. This means they can be placed into clear categories, such as ‘yes/no’, ‘spam/not spam’, and so on.

Classification algorithms (the tools we use to solve classification problems) work by learning a mapping function from the input variables to the output categories. This is commonly achieved using training datasets. These data contain observations whose classifications are already known, so the algorithm can use them as a guide. The learned function then predicts the categories of new observations, with varying degrees of accuracy.
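To make the idea of a learned mapping more concrete, here is a minimal Python sketch of one of the simplest classification algorithms, a 1-nearest-neighbor classifier. It is our own illustration rather than a method prescribed in this post, and the training data and its two features (counts of links and of exclamation marks in an email) are hypothetical. Here the "mapping function" simply finds the most similar labeled observation and copies its class.

```python
import math

# Hypothetical training data: each observation is (input features, known label).
# Features are invented for illustration: (number of links, number of "!" characters).
TRAINING_DATA = [
    ((8, 6), "spam"),
    ((7, 9), "spam"),
    ((0, 1), "not spam"),
    ((1, 0), "not spam"),
]


def classify(features, training_data=TRAINING_DATA):
    """Predict a label for new features by copying the label of the closest
    known observation (a 1-nearest-neighbor classifier)."""
    _, nearest_label = min(
        training_data,
        key=lambda example: math.dist(features, example[0]),
    )
    return nearest_label


print(classify((6, 7)))  # spam
print(classify((0, 0)))  # not spam
```

Real classifiers learn more compact mappings than this one, but the principle is the same: the training data, whose classes are already known, determines the output for new observations.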

What is an example of a classification problem?

A simple example of a classification problem in predictive analytics would be sorting a dataset of vegetables by color, based on their size, shape, and name. While there are several categories you can use, e.g. red, green, orange (and so on) there will always be a finite number of them to choose from.

Vegetables are a straightforward example. In reality, things get more complex. Yes, a classification algorithm might comfortably assign each observation to one of two classes (known as binary classification). However, it could just as easily assign observations to one of more than two classes (known as multi-class classification, which is what our vegetable example falls into). When a single observation can belong to several classes at once (for instance, a bell pepper with shades of green, yellow, and red that therefore fits into all three categories), we call this multi-label classification.
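These three set-ups differ mainly in the shape of the output. The sketch below assumes that some model has already produced a score between 0 and 1 for each color class of one bell pepper. Both the scores and the 0.5 cut-off are hypothetical.

```python
# Hypothetical scores (0 to 1) that some model assigned to one bell pepper,
# one score per color class.
scores = {"red": 0.70, "green": 0.60, "yellow": 0.55, "orange": 0.10}


def binary(score, threshold=0.5):
    """Binary classification: one of exactly two classes."""
    return "yes" if score >= threshold else "no"


def multi_class(scores):
    """Multi-class classification: exactly one label, the highest-scoring one."""
    return max(scores, key=scores.get)


def multi_label(scores, threshold=0.5):
    """Multi-label classification: every label whose score clears the threshold."""
    return {label for label, score in scores.items() if score >= threshold}


print(binary(scores["red"]))  # yes
print(multi_class(scores))    # red
print(multi_label(scores))    # a set containing red, green, and yellow (order may vary)
```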

How can you identify a classification problem?

It’s actually not that hard to identify a classification task. If you can realistically list all the possible values for a data point, then you have a classification problem. If not, it might be a regression task. Let’s look at that now.

3. What is regression?

Unlike classification, which places data into discrete categories, regression problems use input variables to predict continuous values. What do we mean by continuous values? Well, the target outcome of a regression algorithm will always be a quantity. Future values in a time series, sales figures, salaries, scores, heights, weights, and so on are all common output values for regression problems. Like classification, regression can also use training datasets.

What is an example of a regression problem?

An example of a regression problem would be determining the price of food crates based on factors like the quality of the contents, supply chain efficiency, customer demand, and previous pricing. In short, the outcome variable doesn’t fit into discrete categories. Instead, it’s a cost (on a sliding scale) that can’t be categorized.

However, just as with classification, things are more complex in reality. For instance, there are different types of regression models for different tasks. While simple linear regression models a straight-line relationship between one independent variable and one dependent variable, multiple linear regression predicts a dependent output variable from two or more independent input variables (like our food crate example). There is a range of variations on these models. A data analyst’s job is to identify which model is the appropriate one to use and to tweak it accordingly.
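To illustrate the difference between the two models, here is a minimal pure-Python sketch of ordinary least squares. This is a common way to fit linear regression, but it is our choice for this example. The food crate numbers (a quality score and a demand index) are invented. They deliberately follow price = 5 + 2 × quality + 3 × demand exactly, so the fitted coefficients should come out very close to 5, 2, and 3. In practice you would normally use a library such as NumPy or scikit-learn instead of hand-written Gaussian elimination.

```python
def fit_simple_linear(xs, ys):
    """Simple linear regression: one input, one output. Returns (intercept, slope)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def solve(a, b):
    """Solve the square system a * x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b_i] for row, b_i in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def fit_multiple_linear(rows, ys):
    """Multiple linear regression via the normal equations (X^T X) beta = X^T y.
    Returns [intercept, coef_1, coef_2, ...]."""
    design = [[1.0] + list(row) for row in rows]  # leading 1.0 gives the intercept
    k = len(design[0])
    xtx = [[sum(d[i] * d[j] for d in design) for j in range(k)] for i in range(k)]
    xty = [sum(d[i] * y for d, y in zip(design, ys)) for i in range(k)]
    return solve(xtx, xty)


# Hypothetical food crate data: (quality score, demand index) -> price in dollars.
crates = [(1, 1), (2, 1), (1, 2), (3, 2), (2, 3)]
prices = [10, 12, 13, 17, 18]

print(fit_simple_linear([1, 2, 3, 4], [3, 5, 7, 9]))  # (1.0, 2.0)
print(fit_multiple_linear(crates, prices))  # approximately [5, 2, 3]
```

In both cases the output is a point on a continuous scale, which is what makes these regression models.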

How can you identify a regression problem?

If you cannot list all the possible output values (as you can with classification), then you likely have a regression problem. When the desired output variable is a quantity, such as an amount, figure, size, or whole-number count, it’s probably a regression task. This is a good tip for quickly identifying the type of problem you’re faced with.
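The rule of thumb can be written as a tiny Python helper. Treat it as a hypothetical first pass rather than a reliable test. It cannot tell class labels that happen to be stored as numbers (for example 0 and 1) apart from genuine quantities, which is exactly the kind of ambiguity covered in the next section.

```python
def suggest_task_type(target_values):
    """Rough rule of thumb: numeric quantities suggest regression;
    anything else (text labels, True/False) suggests classification."""
    def is_quantity(value):
        # bool is a subclass of int in Python, so exclude it explicitly.
        return isinstance(value, (int, float)) and not isinstance(value, bool)

    if all(is_quantity(v) for v in target_values):
        return "regression"
    return "classification"


print(suggest_task_type([212_500.0, 189_900.0, 305_000.0]))  # regression
print(suggest_task_type(["spam", "not spam", "spam"]))        # classification
```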

4. Classification vs. regression: What is the difference?

Given the seemingly clear distinctions between regression and classification, it might seem odd that data analysts sometimes get them confused. However, as is often the case in data analytics, things are not always 100% clear-cut. In this section, we’ll reaffirm the differences between classification and regression. We’ll then look at their similarities, which, as you’ll learn, highlight their nuances much more clearly.

What’s the difference between classification and regression?

In short, the main difference between classification and regression in predictive analytics is that:

- Classification involves predicting discrete categories or classes.

- Regression involves predicting continuous, real-value quantities.

If you can distinguish between the two, then you’re halfway there. While we’ve mentioned this already, it’s an important point to hammer home. If you identify the problem wrongly, you’ll apply the wrong statistical techniques and may find yourself falling down a rabbit hole that’s hard to get out of!

What are the similarities between classification and regression?

The similarities between regression and classification are what make it challenging to distinguish between them at times. Choose the wrong model for the task at hand, and it’ll hurt your analysis. With this in mind, let’s look at some of the similarities, so you know what to look out for.

Firstly, it may seem logical to assume that regression and classification problems use different algorithms. In fact, many algorithms, such as decision trees and random forests, can be adapted for both classification and regression tasks.
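To show how one algorithm can serve both tasks, here is a minimal sketch of a one-split decision tree, often called a decision stump, written in Python. A full decision tree repeats this splitting step recursively, and a random forest combines many such trees. The house data is hypothetical. The only differences between the classification and regression versions are how a leaf summarizes its targets (most common label versus mean) and how errors are scored (mistakes versus squared error).

```python
from statistics import mean, mode


def fit_stump(xs, ys, leaf_value, loss):
    """Fit a one-split decision tree on a single numeric feature.

    leaf_value summarizes the targets in a leaf; loss scores one prediction.
    Assumes xs contains at least two distinct values.
    """
    def total_loss(group):
        value = leaf_value(group)
        return sum(loss(y, value) for y in group)

    best = None
    for threshold in sorted(set(xs))[1:]:  # every split leaves both sides non-empty
        left = [y for x, y in zip(xs, ys) if x < threshold]
        right = [y for x, y in zip(xs, ys) if x >= threshold]
        cost = total_loss(left) + total_loss(right)
        if best is None or cost < best[0]:
            best = (cost, threshold, leaf_value(left), leaf_value(right))
    _, threshold, left_value, right_value = best
    return lambda x: left_value if x < threshold else right_value


# Hypothetical houses: floor area in square meters.
areas = [50, 60, 70, 120, 130, 140]
labels = ["cheap", "cheap", "cheap", "expensive", "expensive", "expensive"]
prices_k = [150, 160, 170, 400, 410, 420]  # price in $K

# Classification: leaves predict the most common label; loss counts mistakes.
stump_classifier = fit_stump(areas, labels, leaf_value=mode, loss=lambda y, v: y != v)
# Regression: leaves predict the mean; loss is squared error.
stump_regressor = fit_stump(areas, prices_k, leaf_value=mean, loss=lambda y, v: (y - v) ** 2)

print(stump_classifier(100), stump_classifier(135))  # cheap expensive
print(stump_regressor(100), stump_regressor(135))    # 160 410
```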

Meanwhile, other models are only suited to one type of problem. For instance, linear regression can really only be used for regression tasks. To confuse matters further, logistic regression, which you might logically assume is a regression model, is actually a classification model: it estimates the probability that an observation belongs to a particular class, and that probability is then used to assign the class. But that’s data analytics for you…always keeping us on our toes!
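To see why logistic regression counts as a classifier, here is a minimal Python sketch that fits it by plain gradient descent on log loss. The data (a single, already-standardized input feature with 0/1 labels), the learning rate, and the number of epochs are all hypothetical choices for illustration. The model first outputs a probability, and only the final thresholding step turns that probability into a discrete class.

```python
import math


def sigmoid(z):
    """Squash any real number into (0, 1); written to avoid overflow in math.exp."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def fit_logistic(xs, labels, learning_rate=0.1, epochs=5000):
    """Fit P(label = 1 | x) = sigmoid(w * x + b) by batch gradient descent on log loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, labels):
            error = sigmoid(w * x + b) - y  # predicted probability minus true label
            grad_w += error * x
            grad_b += error
        w -= learning_rate * grad_w / n
        b -= learning_rate * grad_b / n
    return w, b


def predict_class(x, w, b, threshold=0.5):
    """Turn the continuous probability into a discrete class: 1 or 0."""
    return 1 if sigmoid(w * x + b) >= threshold else 0


# Hypothetical, already-standardized input feature with binary labels.
xs = [-4, -3, -2, -1, 1, 2, 3, 4]
labels = [0, 0, 0, 0, 1, 1, 1, 1]
w, b = fit_logistic(xs, labels)

print(sigmoid(w * 2 + b))  # a probability between 0.5 and 1
print(predict_class(-2, w, b), predict_class(2, w, b))  # 0 1
```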

Another problem is that you cannot always easily distinguish regression and classification problems based on their input data. For instance, whether you have a regression or a classification task, the input data can be either discrete (e.g. sorted into categories or classes) or continuous (e.g. quantities).

The same problem goes for output data, too. For example, perhaps you’re aiming to predict house prices. Since house prices are continuous quantities, you might well assume that you’re working on a regression task. However, if the desired output is in the form of discrete classes, e.g. ‘cheap’, ‘affordable’, or ‘expensive’, or falls within a range, e.g. ‘from $200K to $299K’ or ‘from $300K to $399K’, you now have a classification task on your hands.
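The shift from a regression target to a classification target can be as small as binning the prices. Here is a minimal Python sketch, assuming hypothetical cut-off points of $200K and $400K between the ‘cheap’, ‘affordable’, and ‘expensive’ classes.

```python
import bisect

# Hypothetical cut-off points (in dollars) between the price classes.
THRESHOLDS = [200_000, 400_000]
CLASSES = ["cheap", "affordable", "expensive"]


def price_class(price):
    """Map a continuous price onto one of a fixed, listable set of classes."""
    return CLASSES[bisect.bisect_right(THRESHOLDS, price)]


house_prices = [185_000, 289_999, 400_000]  # regression-style targets
print([price_class(p) for p in house_prices])  # ['cheap', 'affordable', 'expensive']
```

Once every possible output can be listed, the task fits the definition of classification, even though the underlying data started out as continuous prices.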

What’s more, a classification algorithm can sometimes output continuous values, if these values are in the form of a probability that the data fall into a particular category. For example, if, based on the data you have, there’s a 34.6% chance that the vegetable you’re identifying is a carrot, this is still a classification problem despite having a continuous figure as its output.
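One common way multi-class models produce such probabilities is the softmax function. It is not the only way, and it is our choice for this illustration. The Python sketch below turns hypothetical raw scores for one vegetable into probabilities, then picks the most likely class. The intermediate values are continuous, but the final answer is still one of a fixed set of labels.

```python
import math


def softmax(scores):
    """Convert raw per-class scores into probabilities that sum to 1."""
    largest = max(scores.values())  # subtracting the max keeps math.exp from overflowing
    exps = {label: math.exp(s - largest) for label, s in scores.items()}
    total = sum(exps.values())
    return {label: e / total for label, e in exps.items()}


# Hypothetical raw scores from some vegetable classifier for one observation.
scores = {"carrot": 1.2, "parsnip": 1.5, "beet": 0.3}
probabilities = softmax(scores)  # continuous values; carrot gets about 0.36
predicted = max(probabilities, key=probabilities.get)

print(predicted)  # parsnip
```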

Last but not least, within the field of machine learning, regression and classification are both considered forms of supervised learning. This means that they both learn from labeled training data, where the correct output is already known for each example, rather than seeking out patterns in unlabeled data on their own (as unsupervised learning does).

Learning to spot these subtle similarities between classification and regression is key to choosing the right model for the problem you’re trying to solve. We strongly encourage you to familiarize yourself more with both types of problems by reading about the topic.

5. In summary

In this post, we’ve looked at the differences and similarities between regression and classification, with a focus on predictive analytics and machine learning. We’ve learned that:

- Regression and classification models both play important roles in the area of predictive analytics, in particular, machine learning and AI.

- Classification involves predicting discrete categories or classes (e.g. black, blue, pink)

- Regression involves predicting continuous quantities (e.g. amounts, heights, or weights)

- In some cases, classification algorithms will output continuous values in the form of probabilities.

- Likewise, regression algorithms can sometimes output discrete values in the form of integers.

- Certain algorithms can be used for both classification and regression tasks, while other algorithms can only be used for one task or the other.

- Learning to spot where regression and classification overlap is vital for determining which is the right model for solving a given problem.

It can take many years to fully grasp the complexities of regression and classification. But with this introduction under your belt, you should be ready to explore further. For example, find out how predictive modeling fits into the broader field of data analytics by trying our free, five-day data analytics short course. To discover more introductory topics relating to data analytics, check out the following posts: