Exploratory data analysis is one of the first steps in the data analytics process. In this post, we explore what EDA is, why it’s important, and a few techniques worth familiarizing yourself with.

Data analytics requires a mixed range of skills. This includes practical expertise, such as knowing how to scrape and store data. It also requires more nuanced problem-solving abilities, such as how to analyze data and draw conclusions from it. As a statistical approach, exploratory data analysis (or EDA) is vital for learning more about a new dataset.

Applied early on in the data analytics process, EDA can help you learn a great deal about a dataset’s inherent attributes and properties.

Interested in learning some data analytics skills? Try this free data short course out to see if you like it.

In this post, we’ll introduce the topic in more detail, answering the following questions:

- What is exploratory data analysis?

- Why is exploratory data analysis important?

- What are the underlying principles of exploratory data analysis?

- What are some techniques you can use for exploratory data analysis?

- In summary

First up, though…

1. What is exploratory data analysis?

In data analytics, exploratory data analysis is how we describe the practice of investigating a dataset and summarizing its main features. It’s a form of descriptive analytics.

EDA aims to spot patterns and trends, to identify anomalies, and to test early hypotheses. Although exploratory data analysis can be carried out at various stages of the data analytics process, it is usually conducted before a firm hypothesis or end goal is defined.

In general, EDA focuses on understanding the characteristics of a dataset before deciding what we want to do with that dataset.

Exploratory data analysis often uses visual techniques, such as graphs, plots, and other visualizations. This is because our natural pattern-detecting abilities make it much easier to spot trends and anomalies when they’re represented visually.

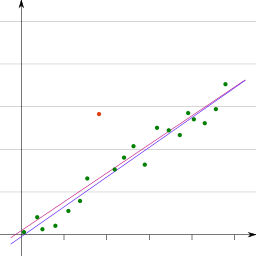

As a simple example, outliers (data points that lie far from the rest and can skew a trend) stand out much more immediately on a scatter graph than they do in columns on a spreadsheet.

Source: Indoor-Fanatiker, derivative work: Indoor-Fanatiker, CC0, via Wikimedia Commons

In the image, the outlier (in red) is immediately clear. Even if you’re new to data analytics, this approach will seem familiar. If you ever plotted a graph in math or science at school in order to infer information about a dataset, then you’ve carried out a basic EDA.

The American mathematician John Tukey began championing exploratory data analysis in the early 1960s and set the approach out in full in his 1977 book, Exploratory Data Analysis. The idea of summarizing a dataset’s key characteristics coincided with the development of the statistical programming language S, which later inspired R. This was also a time when scientists and engineers were working on new data-driven problems related to early computing.

Since then, EDA has been widely adopted as a core tenet of data analytics and data science more generally. It is now considered a common—indeed, indispensable—part of the data analytics process.

Want to try your hand at exploratory data analysis? Try this free, practical tutorial on exploratory data analysis as part of our beginner’s short course. You’ll calculate descriptive statistics for a real dataset, and create pivot tables.

2. Why is exploratory data analysis important?

At this stage, you might be asking yourself: why bother carrying out an EDA?

After all, data analytics today is far more sophisticated than it was in the 1960s. We have algorithms that can automate so many tasks. Surely it’s easier (and even preferable) to skip this step of the process altogether?

In truth, it has been shown time and again that effective EDA provides invaluable insights that an algorithm cannot. You can think of this a bit like running a document through a spellchecker versus reading it yourself. While software is useful for spotting typos and grammatical errors, only a critical human eye can detect the nuance.

An EDA is similar in this respect: tools can help you, but it takes your own intuition to make sense of what they show. This personal, in-depth insight will support detailed data analysis further down the line.

Specifically, some key benefits of an EDA include:

Spotting missing and incorrect data

As part of the data cleaning process, an initial data analysis (IDA) can help you spot any structural issues with your dataset.

You may be able to fix these, or you might find that you need to reprocess the data or collect new data entirely. While this can be a nuisance, it’s better to know upfront, before you dive in with a deeper analysis.
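As a rough illustration of this kind of check, here is a minimal Python sketch using only the standard library. The records, the column names, and the 0 to 120 age range are all made up for the example; a real dataset would need its own rules for what counts as missing or implausible.

```python
# Hypothetical records; None marks a missing value.
records = [
    {"name": "Ana", "age": 34, "city": "Lisbon"},
    {"name": "Ben", "age": None, "city": "Leeds"},
    {"name": "Cho", "age": 29, "city": None},
    {"name": "Dev", "age": -5, "city": "Pune"},  # an impossible age
]


def count_missing(rows):
    """Return a dict mapping each column name to its number of missing values."""
    counts = {}
    for row in rows:
        for column, value in row.items():
            counts.setdefault(column, 0)
            if value is None or value == "":
                counts[column] += 1
    return counts


def invalid_ages(rows, low=0, high=120):
    """Return the names of rows whose age is present but outside [low, high]."""
    return [
        row["name"]
        for row in rows
        if row["age"] is not None and not (low <= row["age"] <= high)
    ]


missing = count_missing(records)
bad_ages = invalid_ages(records)
print(missing)
print(bad_ages)
```

Counting gaps per column and flagging impossible values are usually the first two things worth knowing before deciding whether to fix, reprocess, or re-collect the data.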

Understanding the underlying structure of your data

Properly mapping your data ensures that you maintain high data quality when transferring it from its source to your database, spreadsheet, data warehouse, etc. Understanding how your data is structured means you can prevent mistakes from creeping in.

Testing your hypothesis and checking assumptions

Before diving in with a full analysis, it’s important to make sure any assumptions or hypotheses you’re working on stand up to scrutiny.

While an EDA won’t give you all the details, it will help you spot if you’re inferring the right outcomes based on your understanding of the data. If not, then you know that your assumptions are wrong, or that you are asking the wrong questions about the dataset.

Calculating the most important variables

When carrying out any data analysis, it’s necessary to identify the importance of different variables.

This includes how they relate to each other. For example, which independent variables affect which dependent variables? Determining this early on will help you extract the most useful information later on.

Creating the most efficient model

When carrying out your full analysis, you’ll need to remove any extraneous information. This is because needless additional data can either skew your results or simply obscure key insights with unnecessary noise.

In pursuit of your goal, aim to include only the variables you need. EDA helps identify information that you can safely leave out.

Determining error margins

EDA isn’t just about finding helpful information. It’s also about determining which data might lead to unavoidable errors in your later analysis.

Knowing which data will impact your results helps you to avoid wrongly accepting false conclusions or incorrectly labeling an outcome as statistically significant when it isn’t.

Identifying the most appropriate statistical tools to help you

Perhaps the most practical outcome of your EDA is that it will help you determine which techniques and statistical models will help you get what you need from your dataset.

For instance, do you need to carry out a predictive analysis or a sentiment analysis? An EDA will help you decide. You can learn about different types of data analysis in this guide.

As is hopefully clear by now, intuition and reflection are key skills for carrying out exploratory data analysis. While EDA can involve executing defined tasks, interpreting the results of these tasks is where the real skill lies.

3. What are the underlying principles of exploratory data analysis?

Now that we know what exploratory data analysis is and why it’s important, how exactly does it work?

In short, exploratory data analysis considers what to look for, how to look for it, and, finally, how to interpret what we discover. At its core, EDA is more of an attitude than it is a step-by-step process. Exploring data with an open mind tends to reveal its underlying nature far more readily than making assumptions about the rules we think (or want) it to adhere to.

In data analytics terms, we can generally say that exploratory data analysis is a qualitative investigation, not a quantitative one. This means that it involves looking at a dataset’s inherent qualities with an inquisitive mindset. Usually, it does not attempt to make cold measurements or draw insights about a dataset’s content. This comes later on.

You’d be forgiven for thinking this sounds a bit esoteric for a scientific field like data analytics! But don’t worry. There are some practical principles to exploratory data analysis that can help you proceed. A key one of these is known as the five-number summary.

What is the five-number summary?

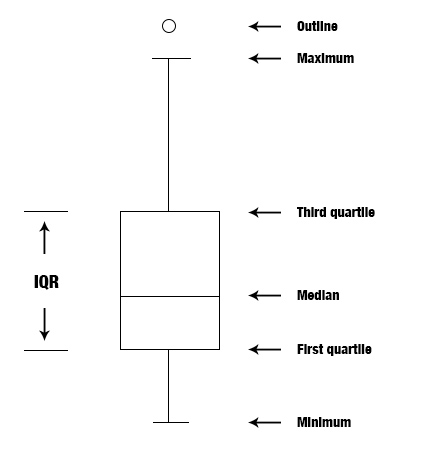

The five-number summary is a set of five descriptive statistics. Simple though these are, they make a useful starting point for any exploratory data analysis.

The aim of the five-number summary is not to make a value judgment on which statistics are the most important or appropriate, but to offer a concise overview of how different observations in the dataset are distributed. This allows us to ask more nuanced questions about the data, such as ‘why are the data distributed this way?’ or ‘what factors might impact the shape of these data?’ These sorts of questions are vital for obtaining insights that will help us determine the goals for our later analysis.

The five-number summary includes the five most common sample percentiles:

- The sample minimum (the smallest observation)

- The lower quartile (the median of the lower half of the data)

- The median (the middle value)

- The upper quartile (the median of the upper half of the data)

- The sample maximum (the largest observation)

The lower and upper quartiles can be used to determine the interquartile range: the difference between the upper and lower quartiles, which spans the middle 50% of the dataset.

In turn, this helps describe the overall spread of the data, allowing you to identify any outliers. These five statistics can be easily shown using a box plot.

Source: Dcbmariano, CC BY-SA 4.0 via Wikimedia Commons

The five-number summary can be used to determine a great number of additional attributes about a given dataset. This is why it is such a foundational part of data exploration.

To make matters easier, many programming languages, including R and Python, have inbuilt functions for determining the five-number summary and producing the corresponding box plots.
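To make the five-number summary concrete, here is a minimal Python sketch that follows the definitions above, taking the quartiles as the medians of the lower and upper halves. The sample values are invented, and the 1.5 × IQR cut-off for flagging outliers is a common convention that we are assuming here rather than a fixed rule. Note that other tools may calculate quartiles slightly differently, so results can vary a little between libraries.

```python
from statistics import median


def five_number_summary(values):
    """Return (minimum, lower quartile, median, upper quartile, maximum).

    Quartiles are the medians of the lower and upper halves. For an
    odd-sized sample, the middle value is left out of both halves.
    """
    data = sorted(values)
    n = len(data)
    if n < 2:
        raise ValueError("need at least two observations")
    half = n // 2
    lower = data[:half]
    upper = data[half + 1:] if n % 2 else data[half:]
    return data[0], median(lower), median(data), median(upper), data[-1]


def flag_outliers(values, factor=1.5):
    """Return values lying more than factor * IQR beyond the quartiles."""
    _, q1, _, q3, _ = five_number_summary(values)
    iqr = q3 - q1
    low, high = q1 - factor * iqr, q3 + factor * iqr
    return [v for v in values if v < low or v > high]


# Hypothetical sample.
sample = [7, 15, 36, 39, 40, 41, 42, 43, 47, 49, 120]
summary = five_number_summary(sample)
outliers = flag_outliers(sample)
print(summary)
print(outliers)
```

These five numbers are exactly what a box plot draws, and the flagged values are the points that would typically appear beyond its whiskers.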

4. What are some techniques you can use for exploratory data analysis?

As we’ve already explained, most (though not all) EDA techniques are graphical in nature.

Graphical tools, like the box plot described previously, are very helpful for revealing a dataset’s hidden secrets. What follows are some common techniques for carrying out exploratory data analysis. Many of these rely on visualizations that can be easily created using tools like R, Python, S-Plus, or KNIME, to name a few popular ones.

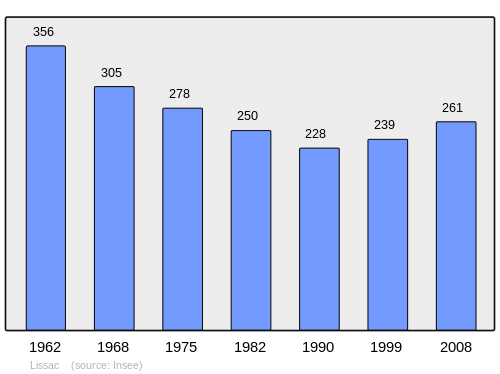

Univariate analysis

Source: Michiel1972, CC BY-SA 3.0 via Wikimedia Commons

Univariate analysis is one of the simplest forms of data analysis. It looks at the distribution of a single variable (or column of data) at a time.

While univariate analysis does not strictly need to be visual, it commonly uses visualizations such as tables, pie charts, histograms, or bar charts.
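As a small example of the non-visual end of univariate analysis, the sketch below groups one variable into equal-width bins and prints a rough text histogram. The ages and the bin width of 10 are invented for illustration; in practice you would more likely reach for a plotting library.

```python
from collections import Counter

BIN_WIDTH = 10  # assumed bin size for this example


def binned_counts(values, bin_width):
    """Group numbers into equal-width bins.

    Returns a list of (bin_start, count) pairs sorted by bin_start.
    """
    counts = Counter((v // bin_width) * bin_width for v in values)
    return sorted(counts.items())


# Hypothetical single variable: ages of survey respondents.
ages = [23, 25, 31, 35, 36, 38, 41, 44, 47, 52, 58, 63]
histogram = binned_counts(ages, BIN_WIDTH)

for start, count in histogram:
    print(f"{start}-{start + BIN_WIDTH - 1}: {'#' * count}")
```

Even in this crude form, the shape of the distribution (where values bunch up and where they thin out) becomes visible at a glance.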

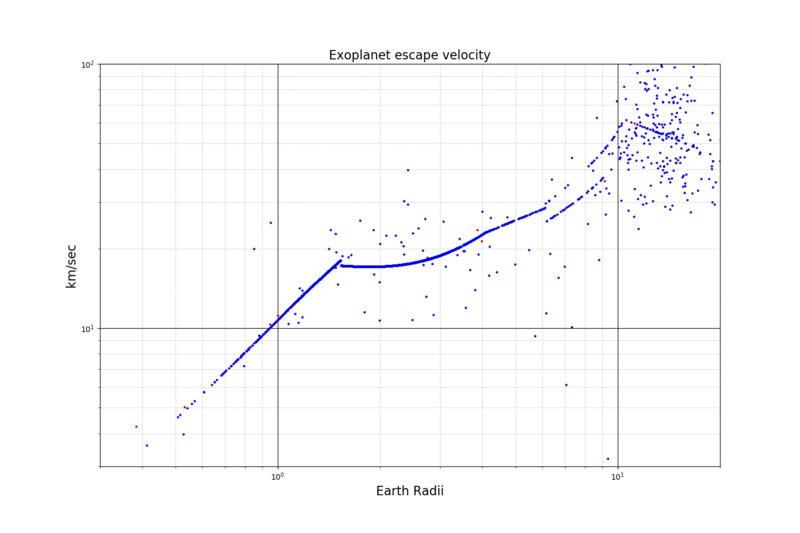

Multivariate analysis

Source: Public domain via Wikimedia Commons

Multivariate analysis looks at the distribution of two or more variables and explores the relationship between them. Most multivariate analyses compare two variables at a time (bivariate analysis).

However, it sometimes involves three or more variables. Either way, it is good practice to carry out univariate analysis on each of the variables before doing a multivariate EDA.

Any plot or graph that shows two or more variables can be used to create a multivariate visualization (for example, a line graph that plots speed against time).
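Alongside a plot, a single number can summarize how strongly two variables move together. The sketch below calculates the Pearson correlation coefficient by hand for the speed-against-time example. The measurements are made up, and Pearson's coefficient is just one possible choice: it only captures linear relationships.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Return the Pearson correlation coefficient of two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length (at least 2)")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / sqrt(var_x * var_y)


# Hypothetical measurements: time in seconds, speed in metres per second.
time_s = [0, 1, 2, 3, 4, 5]
speed_ms = [0.0, 2.1, 3.9, 6.2, 8.0, 9.8]

r = pearson_r(time_s, speed_ms)
print(round(r, 3))
```

A value close to 1 or -1 suggests a strong linear relationship, while a value near 0 suggests little or none. It is still worth plotting the data, since very different patterns can produce the same coefficient.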

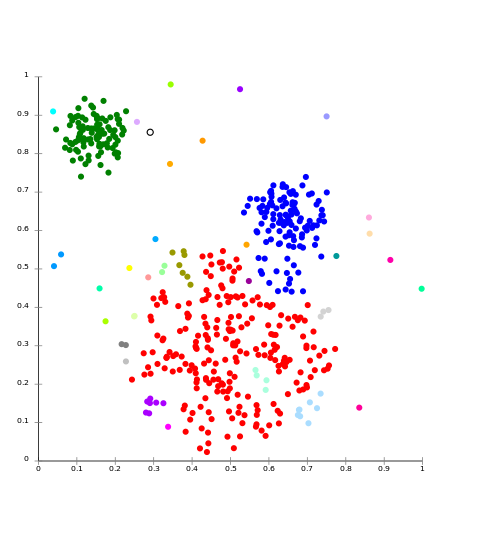

Classification or clustering analysis

Source: Chire, CC BY-SA 3.0 via Wikimedia Commons

Clustering analysis is when we place objects into groups based on their common properties.

It is similar to classification. The key difference is that classification involves grouping items using explicit, predefined classes (e.g. categorizing a dataset of people based on a range of their heights).

Clustering, meanwhile, involves grouping data based on what they implicitly tell us (such as whether someone’s height means they are highly likely, quite likely, or not at all likely to bang their head on a doorframe!)
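To show the difference in code, the sketch below first classifies heights using fixed, predefined ranges, then clusters the same heights with a very small one-dimensional k-means routine that finds its own groups. The height cut-offs, the sample data, and the choice of k-means are all assumptions made for the example; many other clustering methods exist.

```python
def classify_height(height_cm):
    """Assign a predefined class label using fixed (hypothetical) cut-offs."""
    if height_cm < 160:
        return "short"
    if height_cm < 180:
        return "medium"
    return "tall"


def kmeans_1d(values, k, iterations=20):
    """Tiny 1-D k-means (k >= 2); returns the sorted cluster centres.

    Centres start evenly spaced between the smallest and largest value.
    """
    lo, hi = min(values), max(values)
    centres = [lo + (hi - lo) * i / (k - 1) for i in range(k)]
    for _ in range(iterations):
        groups = [[] for _ in range(k)]
        for v in values:
            nearest = min(range(k), key=lambda i: abs(v - centres[i]))
            groups[nearest].append(v)
        # Keep the old centre if a group ends up empty.
        centres = [sum(g) / len(g) if g else c for g, c in zip(groups, centres)]
    return sorted(centres)


# Hypothetical heights in centimetres.
heights = [150, 152, 155, 158, 176, 178, 181, 183, 185]

labels = [classify_height(h) for h in heights]  # classes decided in advance
centres = kmeans_1d(heights, k=2)               # groups found from the data
print(labels)
print(centres)
```

The classifier sorts people into three classes because we told it to, whereas the clustering step settles on two groups simply because that is where the data bunch together.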

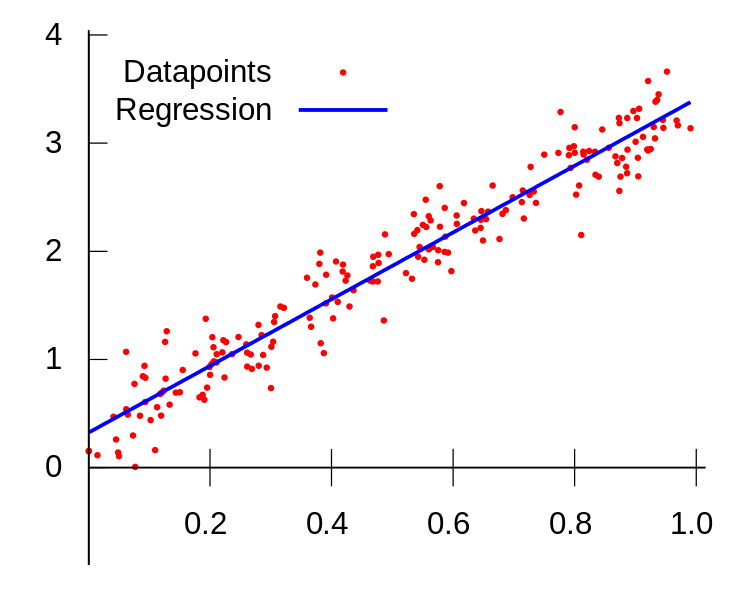

Predictive analysis

Source: Berland, Public domain, via Wikimedia Commons

Although predictive analysis is commonly used in machine learning and AI to make (as the name suggests) predictions, it’s also popular for EDA.

In this context, it doesn’t always refer to uncovering future information but simply using predictive methods—such as linear regression—to find unknown attributes (for example, using existing data to infer the values for gaps in historical data).
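Here is a minimal sketch of that idea: fitting a straight line to the known values by ordinary least squares, then using it to estimate a missing historical value. The yearly figures are invented, and a straight line is only a reasonable choice when the data actually look linear.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of a straight line; returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be identical")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Hypothetical yearly figures with a gap in 2021.
sales = {2018: 100.0, 2019: 110.0, 2020: 120.0, 2021: None, 2022: 140.0}

# Fit the line using only the years that have a value.
known = [(year, value) for year, value in sales.items() if value is not None]
slope, intercept = fit_line([y for y, _ in known], [v for _, v in known])

# Fill each gap with the value the fitted line predicts for that year.
filled = {
    year: value if value is not None else slope * year + intercept
    for year, value in sales.items()
}
print(filled[2021])
```

The filled-in figure is an estimate, not an observation, so it is good practice to keep track of which values were inferred.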

These represent just a small handful of the techniques you can use for conducting an EDA. But they hopefully offer a taste of the kinds of approaches you can take when trying to better understand a dataset.

5. In summary

In this post, we’ve introduced the topic of exploratory data analysis, why it’s important, and some techniques you might want to familiarize yourself with before carrying one out. We’ve learned that exploratory data analysis:

- Summarizes the features and characteristics of a dataset.

- Is a philosophy, or approach, rather than a defined process.

- Often draws out insights using visualizations, e.g. graphs and plots.

- Is important for spotting errors, checking assumptions, identifying relationships between variables, and selecting the right data modeling tools.

- Builds on the five-number summary, namely: the sample minimum and maximum, the lower and upper quartile, and the median.

- Employs various techniques, such as univariate and multivariate analysis, clustering, and predictive analytics, to name a few.

To learn more about exploratory data analysis or to put it into context within the broader data analytics process, try our free, five-day data analytics short course. Otherwise, for more introductory data analytics topics, check out the following posts: